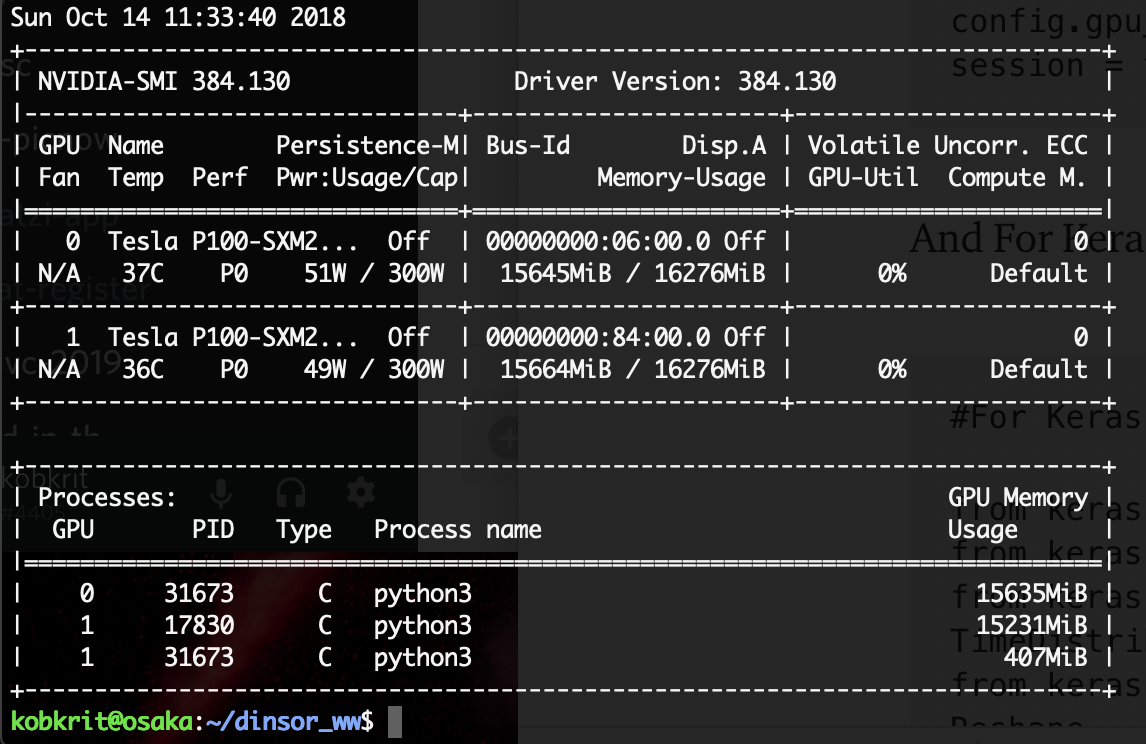

Towards Efficient Multi-GPU Training in Keras with TensorFlow | by Bohumír Zámečník | Rossum | Medium

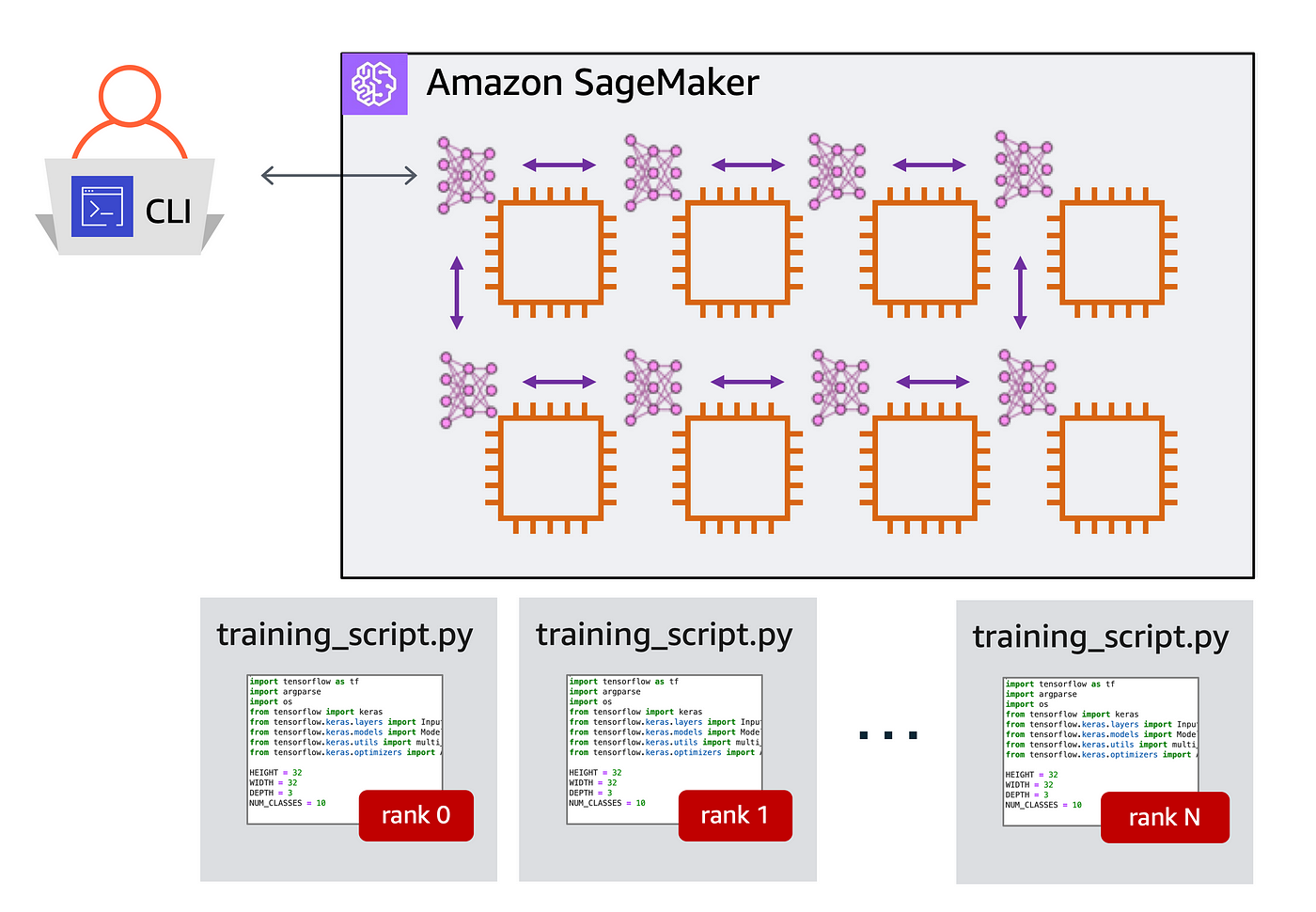

Multi-GPU distributed deep learning training at scale with Ubuntu18 DLAMI, EFA on P3dn instances, and Amazon FSx for Lustre | AWS Machine Learning Blog

A quick guide to distributed training with TensorFlow and Horovod on Amazon SageMaker | by Shashank Prasanna | Towards Data Science

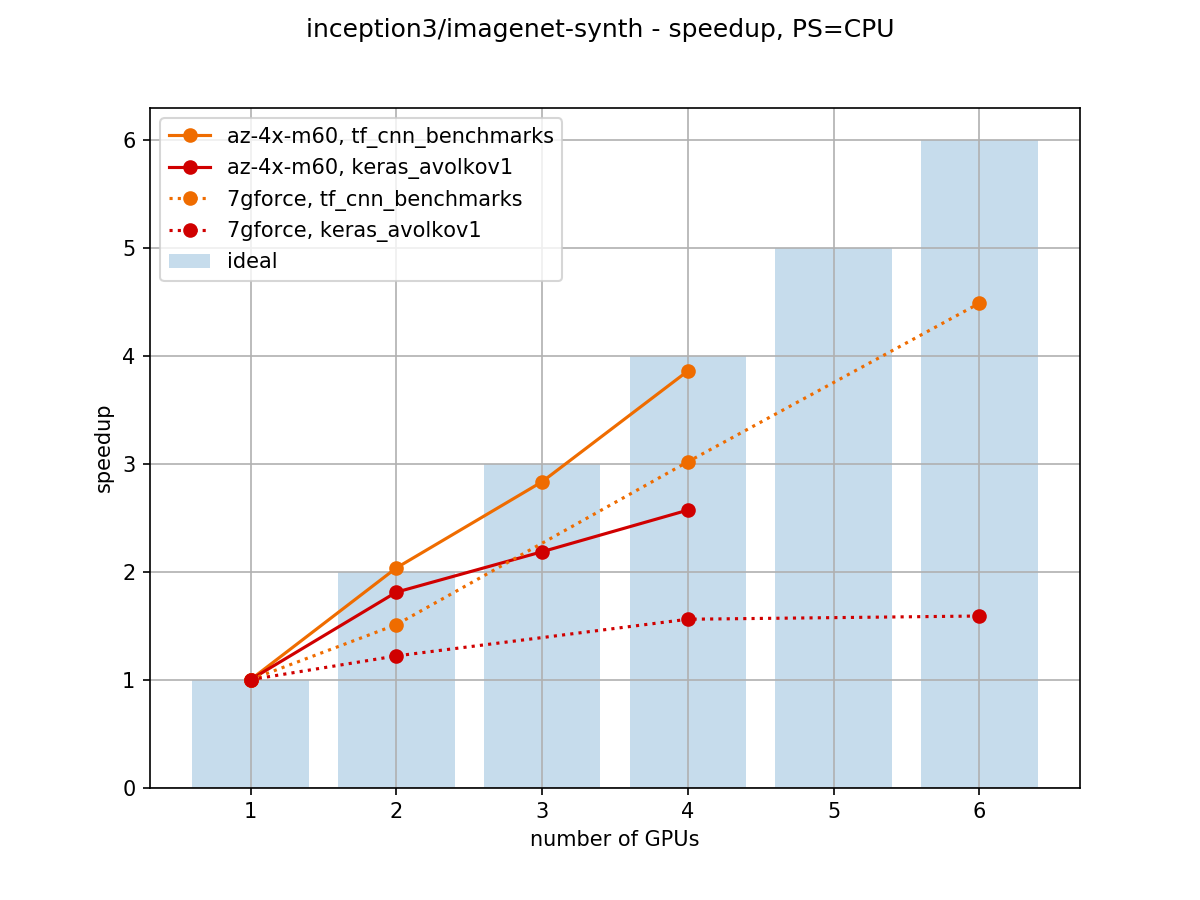

Towards Efficient Multi-GPU Training in Keras with TensorFlow | by Bohumír Zámečník | Rossum | Medium

François Chollet on Twitter: "Tweetorial: high-performance multi-GPU training with Keras. The only thing you need to do to turn single-device code into multi-device code is to place your model construction function under